2025

Lunar Twins: We Choose to Go to the Moon with Large Language Models

Xin-Yu Xiao, Yalei Liu, Xiangyu Liu, Zengrui Li, Erwei Yin, Qianchen Xia

Annual Meeting of the Association for Computational Linguistics (ACL) 2025 Accepted

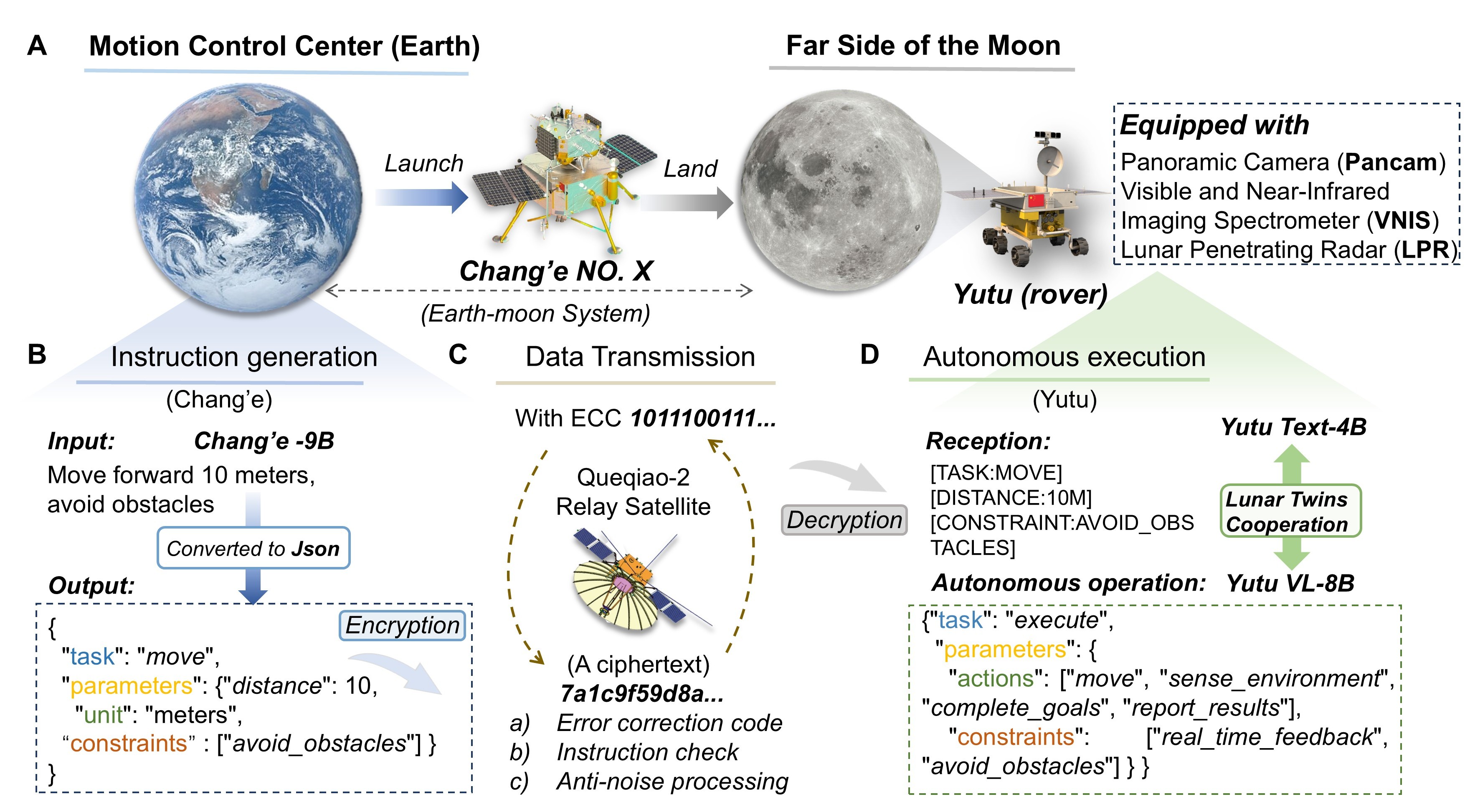

This paper presents Lunar Twins, the first large language models specifically designed for lunar exploration. The system includes the Chang’e and Yutu models, introduces a collaborative multi-agent workflow (Lunar_GenData), and establishes the first specialized lunar dataset integrating data from the Chang’e missions. Extensive experiments show that Lunar Twins significantly outperform comparable models in domain expertise and show potential for embodied intelligence.

Integrating Wavelet Transforms into Image Reconstruction Networks for Effective Style Transfer

Yunfei Chu, Xin-Yu Xiao, Longchen Han, Yaoshun Yue, Maohai Lin

Journal of Imaging Science and Technology 2025 Published

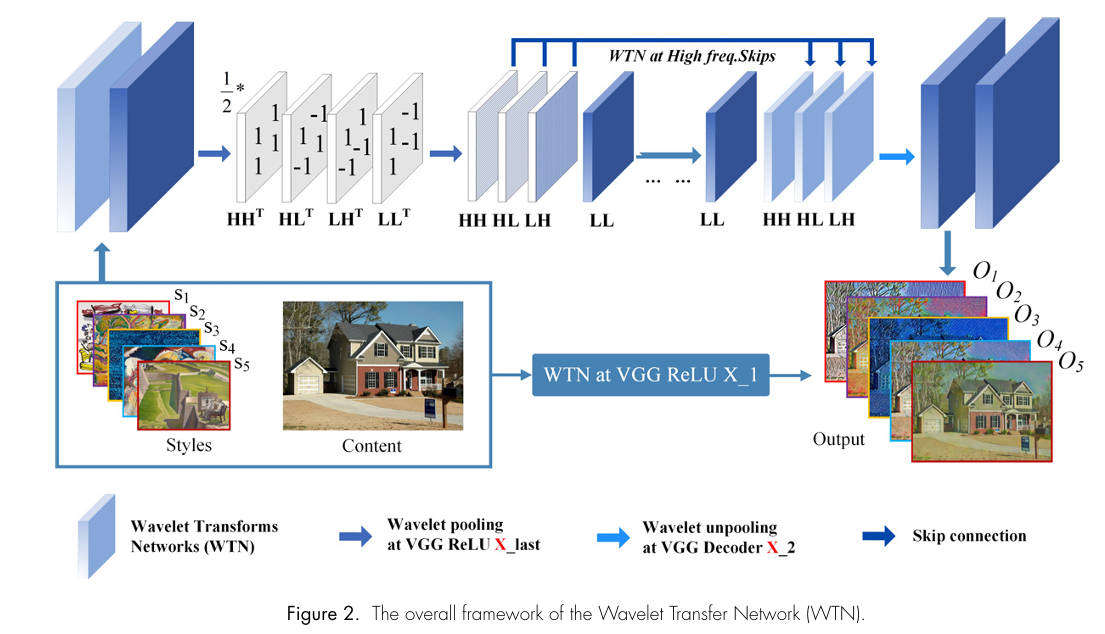

This paper presents an effective method for image style transfer by integrating wavelet transforms into whitening and coloring processes within image reconstruction networks. The proposed Wavelet Transfer Network (WTN) directly aligns the feature covariance of content and style images, yielding high-quality stylized outputs with enhanced efficiency and generalization. Experimental results demonstrate the superiority of WTN over existing methods in both arbitrary and photorealistic style transfer, setting a new benchmark in the field.
